Microservices, or microservice architecture, are no longer an exotic technology. Many projects help you build microservices quickly, and the major cloud service providers offer options for them too. Engineers are now starting to think about efficient ways to offer and consume microservice-based systems.

API Gateway and BFF (Back-end for Front-end)

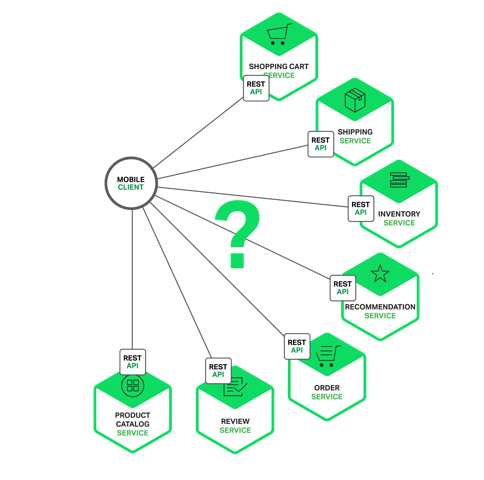

Basically, a microservice system is composed of multiple services, which typically run on different machines with different hosts.

When calling the APIs, the client has to configure a different host for each domain it needs data from. Moreover, authentication data (such as a token) has to be checked on every request.

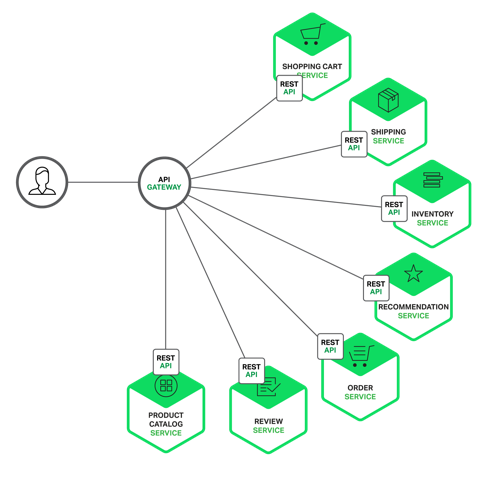

An API gateway addresses this, and it is more than a single entry point for requests. It encapsulates the internal system and exposes a single interface that works as the ‘gate’ to the whole infrastructure. Cross-cutting concerns such as authentication, load balancing, and caching can then be handled once, inside this gate.

Of course, there are some problems.

- Because all API requests come through this gate, it can become a bottleneck.

- Different types of devices may require different data, but a single gateway cannot cope with that flexibly. The only option is to add a new API to the gateway (or modify an existing one).

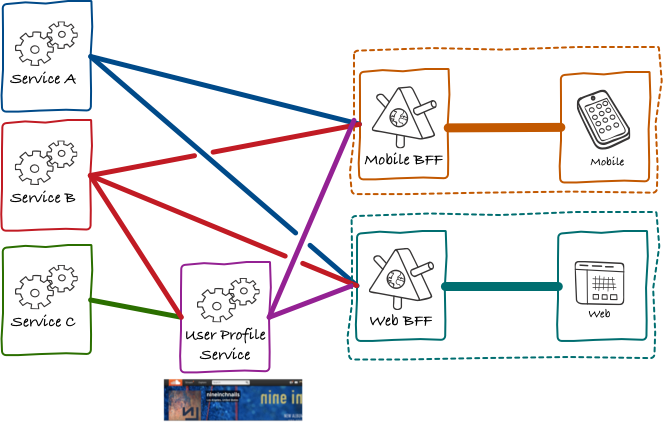

So some smart engineers at SoundCloud came up with a new approach and named it the BFF (Back-end for Front-end) pattern.

This is probably the most famous diagram explaining the BFF pattern. Instead of designing one gateway API to handle every case (or device), you build a separate ‘gateway’ for each type of client (web, mobile).

Actually, these two patterns are logically similar. Both put a layer between the client and the server to organize requests and handle common functions in a single place.
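
To make the difference concrete, here is a small sketch of what two BFFs might return for the same order. The record, its field names, and both shaping functions are made up for illustration; they are not taken from SoundCloud's implementation.

```javascript
// A hypothetical order record, as an internal order service might return it.
const sampleOrder = {
  id: 'o-1',
  status: 'PAID',
  items: [
    { sku: 'A', price: 10 },
    { sku: 'B', price: 5 },
  ],
  shippingAddress: '1 Example Street',
};

// Sum of item prices, shared by both BFFs.
function orderTotal(order) {
  return order.items.reduce((sum, item) => sum + item.price, 0);
}

// Web BFF: a desktop page has room for the full detail.
function toWebOrder(order) {
  return {
    id: order.id,
    status: order.status,
    items: order.items,
    shippingAddress: order.shippingAddress,
    total: orderTotal(order),
  };
}

// Mobile BFF: a small screen only needs a summary, so the payload is smaller.
function toMobileOrder(order) {
  return {
    id: order.id,
    status: order.status,
    itemCount: order.items.length,
    total: orderTotal(order),
  };
}
```

Each BFF owns its own response shape, so a change for the mobile app does not force a change in the API used by the web client.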

If you want to know more, please take a look at the references at the end of this post.

Proxy for Express Framework

The simplest design for a BFF (or gateway) is a proxy. For NodeJS users, the open source module http-proxy-middleware makes this easy to implement.
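
A minimal sketch of such a proxy could look like the following. It assumes Express and the option names of http-proxy-middleware v3 (`pathFilter`; older major versions spell some options differently), and the port in the usage comment is arbitrary.

```javascript
// Proxy options, assuming http-proxy-middleware v3 option names.
const orderProxyOptions = {
  target: 'https://order-service-host', // host of the order microservice
  changeOrigin: true,                   // rewrite the Host header to match the target
  pathFilter: '/api/v1/order',          // only proxy requests under this path
};

// Build the Express app. The packages are required lazily so that the options
// above can be inspected or unit-tested without installing them.
function createBffApp() {
  const express = require('express');
  const { createProxyMiddleware } = require('http-proxy-middleware');

  const app = express();
  app.use(createProxyMiddleware(orderProxyOptions));
  return app;
}

// Usage (the port is an arbitrary choice for this sketch):
// createBffApp().listen(3000);
```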

In this case, every API request whose path includes /api/v1/order is forwarded to the microservice at https://order-service-host/. If we need to modify the request or response data, we can do it with the hooks this module provides.

For example, if you want to add a ‘Bearer’ token to every request, it can be done like this:
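
One way to do this is the module's `proxyReq` hook, which runs just before the request is sent to the target. The sketch below assumes the v3 `on: { proxyReq }` option (v2 names it `onProxyReq`). `getToken` is a hypothetical function that returns whatever token your BFF holds for the current request.

```javascript
// Returns a proxyReq handler that adds an Authorization header.
// `getToken` is hypothetical: it receives the incoming request and returns
// a token string, or undefined when no token is available.
function makeBearerInjector(getToken) {
  return function onProxyReq(proxyReq, req) {
    const token = getToken(req);
    if (token) {
      proxyReq.setHeader('Authorization', `Bearer ${token}`);
    }
  };
}

// Options for createProxyMiddleware, assuming v3 option names.
function buildAuthProxyOptions(getToken) {
  return {
    target: 'https://order-service-host',
    changeOrigin: true,
    pathFilter: '/api/v1/order',
    on: { proxyReq: makeBearerInjector(getToken) },
  };
}

// Usage (reading the token from an environment variable is an assumption):
// app.use(createProxyMiddleware(buildAuthProxyOptions(() => process.env.SERVICE_TOKEN)));
```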

Or you might want to send error logs to ELK for an alerting system. That can be done like this:
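
A possible shape for this is an `error` handler on the proxy. The sketch assumes the v3 `on: { error }` option (v2 names it `onError`). `logger` is a hypothetical object with an `error(entry)` method; how its output reaches ELK (for example through a log shipper) is outside this sketch.

```javascript
// Returns an error handler for the proxy. `logger` is hypothetical: any object
// with an `error(entry)` method that forwards structured logs toward ELK.
function makeProxyErrorHandler(logger) {
  return function onError(err, req, res) {
    logger.error({
      message: err.message,
      code: err.code,
      method: req.method,
      url: req.url,
      timestamp: new Date().toISOString(),
    });

    // `res` can be a raw socket for WebSocket traffic, so check before replying.
    if (res && typeof res.writeHead === 'function' && !res.headersSent) {
      res.writeHead(502, { 'Content-Type': 'application/json' });
      res.end(JSON.stringify({ error: 'Bad gateway' }));
    }
  };
}

// Wiring, assuming v3 option names:
// createProxyMiddleware({
//   target: 'https://order-service-host',
//   changeOrigin: true,
//   on: { error: makeProxyErrorHandler(logger) },
// });
```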

Slightly advanced logic for better performance

In some cases it is better to avoid a plain proxy for the sake of performance.

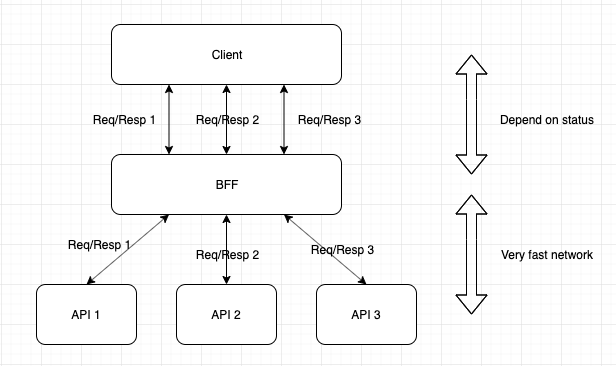

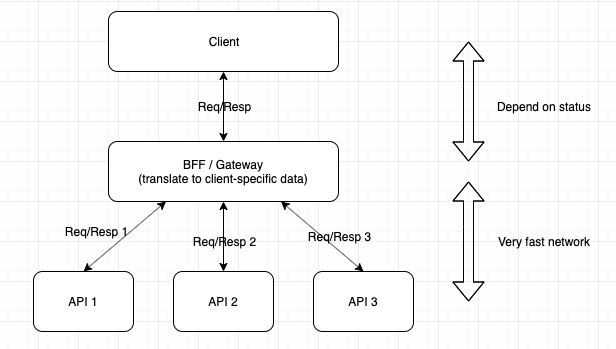

As the images show, there can be cases where the client has to fetch data from multiple domains, while the responses from ‘API-1’ and ‘API-2’ are useless for rendering on the client. This means there is a ‘waste of HTTP request/response’ on the client. The waste is usually very small, but it can be very big.

Moreover, if your services are hosted by a cloud computing provider, network performance between microservices will be stable (and fast!) as long as they are in the same cluster. Between the BFF and the client, however, network speed depends heavily on where the user is, and if the client is somewhere with a poor network signal, this ‘waste of HTTP request/response’ will hurt service performance even more.

This is a slightly more advanced flow that reduces the size and/or number of requests and responses between the client and the BFF. With a plain proxy, however, we cannot change the flow depending on the status of each response.

So, for example, if we want to find the shipping status of an order and need to call APIs in both the ‘order’ and ‘shipping’ domains (assume the client calls /api/v1/bff/shipping-of-order for this…), we can proceed as follows:
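
The sketch below shows one way such an endpoint could be written as an Express-style handler. Several details are assumptions made for illustration: the internal URLs, the `orderId` query parameter, the `shippingId` and `status` fields, and the `NOT_SHIPPED` value. It uses the global `fetch` available in Node 18+, wrapped in an injectable `getJson` so the flow can be tested without a network.

```javascript
// Hypothetical internal base URLs for the two domains.
const ORDER_API = 'https://order-service-host/api/v1/order';
const SHIPPING_API = 'https://shipping-service-host/api/v1/shipping';

// Default JSON fetcher built on the global fetch (Node 18+).
async function fetchJson(url) {
  const response = await fetch(url);
  return { status: response.status, body: response.ok ? await response.json() : null };
}

// Handler for GET /api/v1/bff/shipping-of-order?orderId=...
function makeShippingOfOrderHandler(getJson = fetchJson) {
  return async function shippingOfOrder(req, res) {
    const orderId = req.query.orderId;
    if (!orderId) {
      return res.status(400).json({ error: 'orderId is required' });
    }
    try {
      const order = await getJson(`${ORDER_API}/${encodeURIComponent(orderId)}`);
      if (order.status === 404) return res.status(404).json({ error: 'Order not found' });
      if (order.status !== 200) return res.status(502).json({ error: 'Order service failed' });

      // Skip the second call when the order has not been shipped yet.
      if (!order.body.shippingId) {
        return res.status(200).json({ orderId, shippingStatus: 'NOT_SHIPPED' });
      }

      const shipping = await getJson(`${SHIPPING_API}/${encodeURIComponent(order.body.shippingId)}`);
      if (shipping.status !== 200) return res.status(502).json({ error: 'Shipping service failed' });

      // Return only what the client needs to render.
      return res.status(200).json({ orderId, shippingStatus: shipping.body.status });
    } catch (err) {
      return res.status(502).json({ error: 'Upstream request failed' });
    }
  };
}

// Usage: app.get('/api/v1/bff/shipping-of-order', makeShippingOfOrderHandler());
```

The client makes one request and receives one small payload, while the two internal calls stay on the fast network between the BFF and the microservices.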

As you can see, the BFF-side code has grown and the logic is more complex, because we need to check the data and return a value for each status ourselves. But when statuses have to be checked across multiple domains and the client needs only a small part of the final result, this could make users happier.

Reference

- https://microservices.io/patterns/microservices.html

- https://philcalcado.com/2015/09/18/the_back_end_for_front_end_pattern_bff.html

- https://www.nginx.com/blog/building-microservices-using-an-api-gateway/

- https://docs.microsoft.com/en-us/azure/architecture/patterns/backends-for-frontends

- https://tsh.io/blog/design-patterns-in-microservices-api-gateway-bff-and-more/

- https://fullstackdeveloper.tips/seeing-the-bff-pattern-used-in-the-wild